Part Two of a Three-Part “How Autonomous Vehicles Work” Series

Situational awareness is the key to good driving. To navigate to a desired destination, drivers need to know where they are and observe their surroundings in real time. These observations allow the driver to act instinctively: accelerating or braking, changing lanes, merging onto the highway, and maneuvering around obstacles and objects.

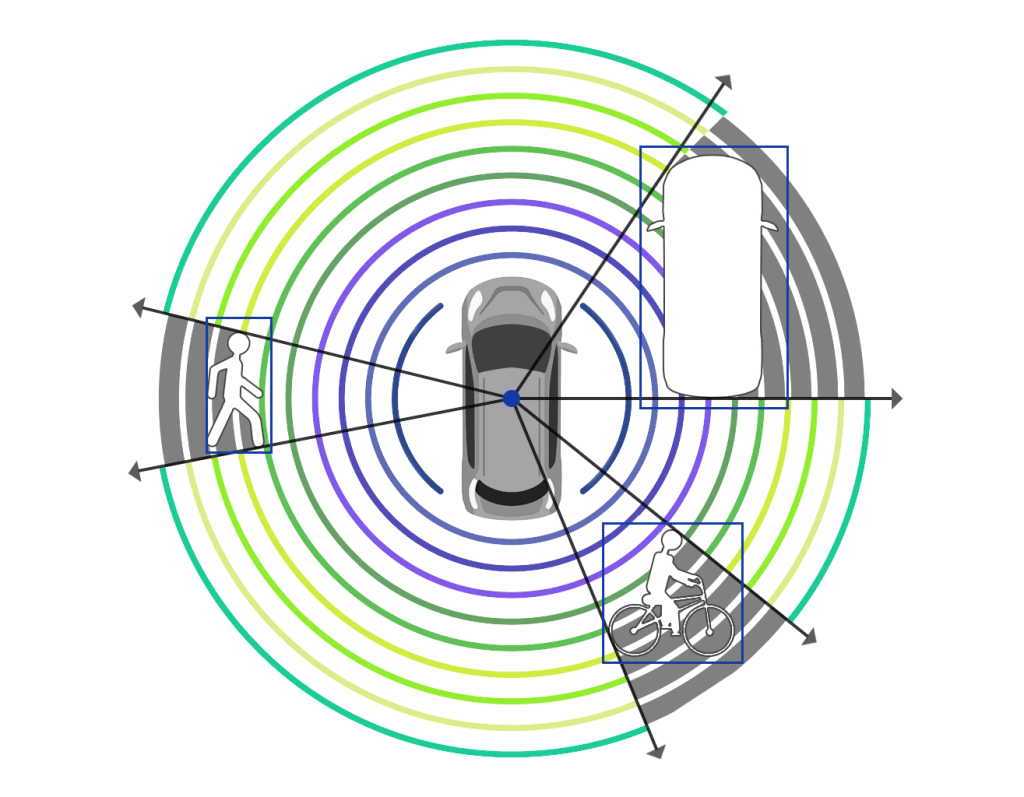

Fully autonomous vehicles (AVs) work in much the same way, except that they use sensor and GPS technologies to perceive the environment and plan a path to the desired destination. These technologies work together to establish where the car is and which route it should take. They continuously monitor what is going on around the car, locating nearby people and objects and assessing the speed and direction of their movements.

This constant flow of information is fed into the car’s onboard computer system, which decides the safest way to navigate its surroundings. To better understand how sensor technologies in autonomous cars work, let’s examine how these vehicles perceive their location and environment to identify and avoid objects in their path.

Precisely Measuring the Vehicle’s Location and Surroundings

Sensor technologies provide information about the surrounding environment to the vehicle’s computer system, allowing the vehicle to move safely in our three-dimensional world. These sensors gather data that describe a car’s changes in position and orientation.

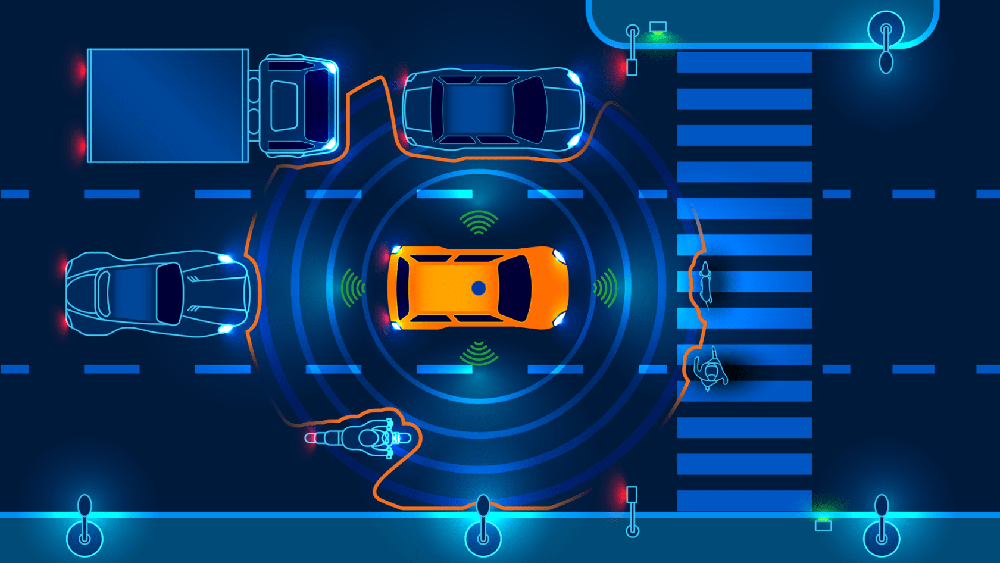

Autonomous vehicles use high-definition maps to guide the car’s navigation system. Recent developments in AV technology aim to generate and update these maps in real time. While this is still a work in progress, it is necessary because roadway conditions are not static: congestion, accidents, and construction complicate real-life movement on our streets and highways. On-vehicle sensing technologies, such as LiDAR, cameras, and radar, perceive the environment in real time to provide accurate data about these ever-changing roadway situations.

The real-time maps that these sensors produce are often highly detailed, including road lanes, pavement edges, shoulders, dividers, and other critical information. They also include the locations of street lights, utility poles, and traffic signs. The vehicle must be aware of each of these features to navigate the roadway safely.

Sensing technologies address other critical driving requirements. For instance, Velodyne LiDAR sensors give autonomous vehicles a 360° view, so the vehicle can see its entire surroundings while operating. A wide field of view is particularly important in complicated situations, such as a high-speed merge onto a highway.

Detecting and Avoiding Objects

Sensor technologies provide onboard computers with the data they need to detect and identify objects such as vehicles, bicyclists, animals, and pedestrians. This data also allows the vehicle’s computer to measure these objects’ locations, speeds and trajectories.
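As a rough illustration of how speed and trajectory can be derived from position data, the sketch below estimates an object's velocity from two timestamped positions and extrapolates where it will be a moment later. The `Observation` type, the function names, and the constant-velocity assumption are all hypothetical choices made for this example; production tracking systems use considerably more sophisticated filtering.

```python
import math
from dataclasses import dataclass


@dataclass
class Observation:
    """One detected position of an object (hypothetical structure)."""
    t: float  # timestamp in seconds
    x: float  # position in metres, vehicle-centred frame
    y: float


def estimate_velocity(prev: Observation, curr: Observation) -> tuple[float, float]:
    """Return (vx, vy) in m/s from two observations of the same object."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("observations must be in increasing time order")
    return ((curr.x - prev.x) / dt, (curr.y - prev.y) / dt)


def speed_and_heading(vx: float, vy: float) -> tuple[float, float]:
    """Return speed in m/s and heading in degrees (0 = +x axis, 90 = +y axis)."""
    return math.hypot(vx, vy), math.degrees(math.atan2(vy, vx))


def predict_position(curr: Observation, velocity: tuple[float, float],
                     horizon_s: float) -> tuple[float, float]:
    """Extrapolate the position horizon_s seconds ahead at constant velocity."""
    vx, vy = velocity
    return (curr.x + vx * horizon_s, curr.y + vy * horizon_s)


if __name__ == "__main__":
    # Illustrative numbers only: an object 10 m ahead moving sideways.
    first = Observation(t=0.0, x=10.0, y=0.0)
    second = Observation(t=0.5, x=10.0, y=2.0)
    velocity = estimate_velocity(first, second)
    print(speed_and_heading(*velocity))
    print(predict_position(second, velocity, horizon_s=1.0))
```

Two observations are the minimum needed for a velocity estimate; averaging over more observations would reduce the effect of measurement noise.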

A useful example of object detection and avoidance in autonomous vehicle testing is a dangerous tire fragment on the freeway. This example is often cited because it causes trouble for all sensors. Tire fragments are usually too small to spot easily from a long distance, and they are often the same color as the road surface. AV sensor technology must have high enough resolution to accurately detect the fragment’s location on the roadway. This requires distinguishing the tire from the asphalt and determining that it is a stationary object (rather than something like a small, moving animal).

In this situation, the vehicle needs not only to detect the object but also to classify it as a tire fragment that must be avoided. Then the vehicle must determine the right course of action, such as changing lanes to avoid the tire fragment without hitting another vehicle or object. To give the vehicle adequate time to change its path and speed, these steps must all happen in less than a second. Again, these decisions made by the vehicle’s onboard computer depend on accurate data provided by the vehicle’s sensors.
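To see why the time budget is so tight, it helps to work through the arithmetic. The sketch below compares the time a vehicle has before reaching an object with the time it needs to process the detection and complete an avoidance maneuver. Every number in it (highway speed, detection range, processing and maneuver times) is an illustrative assumption, not a measured figure for any particular vehicle or sensor.

```python
def time_until_object(detection_range_m: float, speed_mps: float) -> float:
    """Seconds until the vehicle reaches a stationary object at constant speed."""
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    return detection_range_m / speed_mps


def can_avoid(detection_range_m: float, speed_mps: float,
              processing_s: float, maneuver_s: float) -> bool:
    """True if detection, decision, and maneuver all fit in the time available."""
    time_available = time_until_object(detection_range_m, speed_mps)
    return processing_s + maneuver_s <= time_available


if __name__ == "__main__":
    speed = 30.0             # m/s, roughly 108 km/h (assumed highway speed)
    detection_range = 100.0  # m, assumed distance at which the fragment is seen
    maneuver = 2.5           # s, assumed time to complete a lane change

    # Distance covered while the computer is still deciding.
    for processing in (0.5, 1.5):
        covered = speed * processing
        ok = can_avoid(detection_range, speed, processing, maneuver)
        print(f"processing {processing} s: {covered} m travelled, avoidable={ok}")
```

Under these assumed numbers, every extra second of processing costs 30 metres of road, which is why both detection range and decision speed matter.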

A Closer Look at Sensor Technologies

Sensor technologies perceive a car’s environment and provide the onboard map with rich information about current roadway conditions. To build redundancy into self-driving systems, automakers utilize an array of sensors, including LiDAR, radar, and cameras.

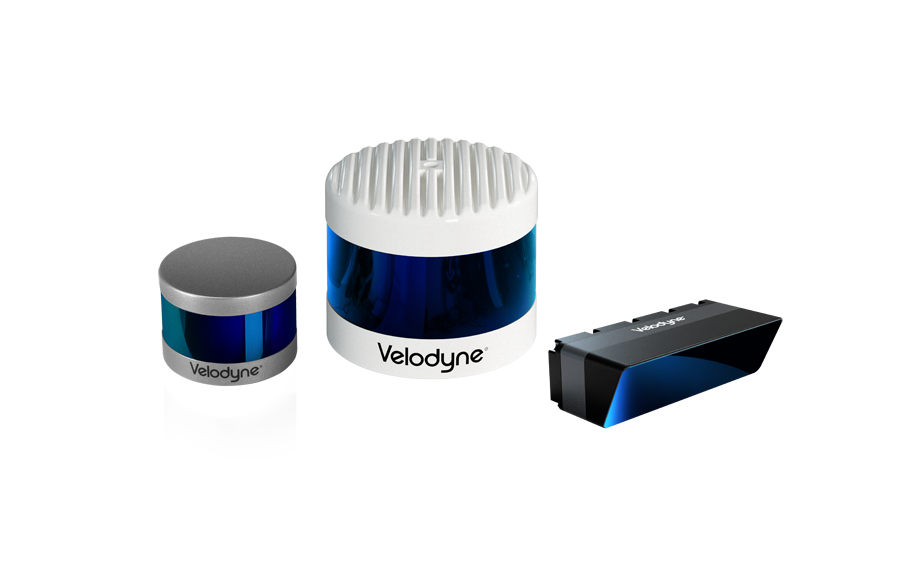

LiDAR is the core sensor technology for autonomous vehicles, with Velodyne LiDAR sensors delivering the range, resolution, and accuracy required by the most advanced autonomous vehicle programs in the world. LiDAR sensors reflect light off surrounding objects at an enormously high rate, with some LiDAR sensors producing millions of laser pulses each second. By measuring the time required for each pulse to “bounce off” an object and return to the sensor, multiplying this time by the speed of light, and halving the result to account for the round trip, the distance to the object can be calculated. Gathering this distance data at an extremely high rate produces a “point cloud,” or a 3D representation of the sensor’s surroundings, which locates the vehicle in the map precisely, to within centimeters.
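The time-of-flight calculation can be sketched in a few lines. Because each pulse travels to the object and back, the one-way distance is half of the round-trip time multiplied by the speed of light. The second function shows how a measured range plus the beam's pointing angles could become one point in a point cloud; the function names and the angle convention (azimuth measured in the horizontal plane from the x axis, elevation measured up from that plane) are assumptions for this example, and real sensors define their own conventions.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """One-way distance in metres from a pulse's round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float,
                 elevation_deg: float) -> tuple[float, float, float]:
    """Convert one range measurement and its beam angles to an (x, y, z) point."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el))


if __name__ == "__main__":
    # A pulse that returns after one microsecond hit something about 150 m away.
    distance = range_from_round_trip(1e-6)
    print(f"{distance:.2f} m")
    print(to_cartesian(distance, azimuth_deg=90.0, elevation_deg=0.0))
```

Repeating this conversion for every returned pulse yields the set of 3D points that makes up the point cloud.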

Radar has long-range detection capabilities and can track the speed of other vehicles on the road. However, radar is not equipped with the resolution or accuracy to provide the detailed, precise information supplied by LiDAR.

Cameras can identify colors and fonts, so they are capable of reading traffic signals, road signs, and lane markings. However, unlike LiDAR, cameras rely on ambient light to operate and are hindered both in low light and when hit with direct light. In contrast, because LiDAR emits its own light, it performs well whether it is day or night.

Since LiDAR meets more of the requirements for AVs but is not as well-known as camera and radar technologies, our next post will dive more deeply into LiDAR sensor technology and its benefits to AVs.

How Autonomous Vehicles Work

Part 1: How They Will Improve the Cost, Convenience, and Safety of Driving

Part 2: How Autonomous Vehicles Perceive and Navigate Their Surroundings

Part 3: How LiDAR Technology Enables Autonomous Cars to Operate Safely

Velodyne Lidar (Nasdaq: VLDR, VLDRW) ushered in a new era of autonomous technology with the invention of real-time surround view lidar sensors. Velodyne, a global leader in lidar, is known for its broad portfolio of breakthrough lidar technologies. Velodyne’s revolutionary sensor and software solutions provide flexibility, quality and performance to meet the needs of a wide range of industries, including robotics, industrial, intelligent infrastructure, autonomous vehicles and advanced driver assistance systems (ADAS). Through continuous innovation, Velodyne strives to transform lives and communities by advancing safer mobility for all.