One of the most fundamental building blocks of any modern autonomous system is the perception module, which lets a machine interpret the real world through sensors such as cameras, lidars and radars. In recent years, advances in deep learning have spawned an entirely new generation of perception algorithms that are trained on the huge amounts of data captured by these sensors.

Deepen plays directly into this technology trend, providing AI-powered software tools and services that help process sensor data efficiently and accurately. Deepen helps companies curate, annotate and validate real-world, multi-sensor data for perception development.

To learn more about Deepen and how its solutions help autonomous development, we connected with Andrew Lee, Vice President of Business Development at Deepen.

AL: Most modern perception systems built on deep learning require vast amounts of labeled data from sensors such as cameras, lidars and radars, and this data is what their algorithms are trained on. Raw camera images, lidar point clouds and radar returns are labeled, or assigned semantic meaning, to teach machines to recognize key objects such as vehicles, pedestrians and traffic signs. With that understanding, machines can then decide how to navigate their surroundings.

AL: Depth sensors such as lidars provide another dimension of information (direct distance measurements) that is especially useful for many perception models. Cameras today do not provide this information natively. In our view, most companies developing advanced autonomous systems adopt multimodal sensor suites that include lidars, and this is especially true of autonomous vehicle (AV) makers.

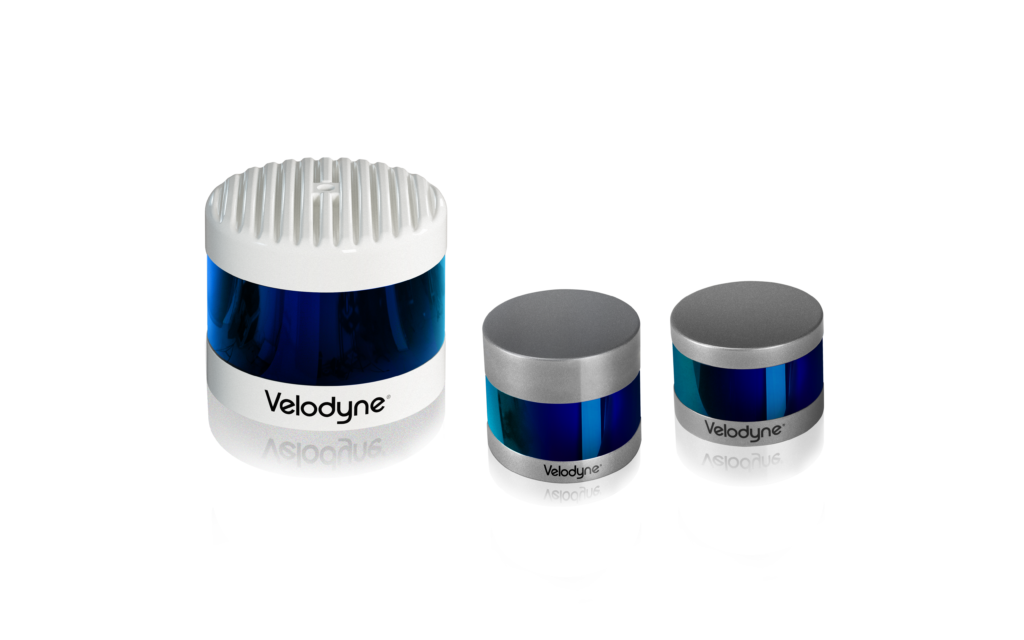

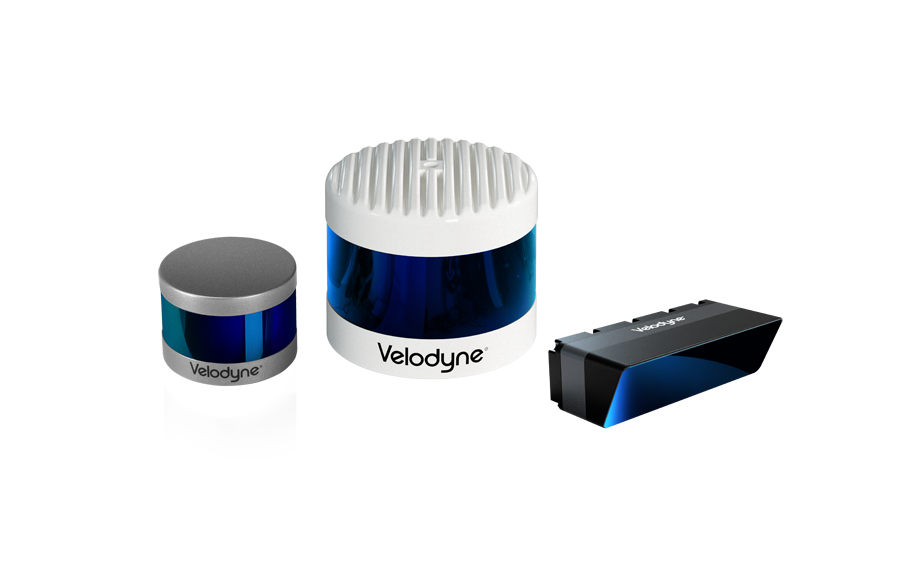

AL: Most of our AV clients use Velodyne lidars to collect data for perception model training. We offer a range of tools, services and IP to support that development, customized to their pipelines, which are often non-standardized.

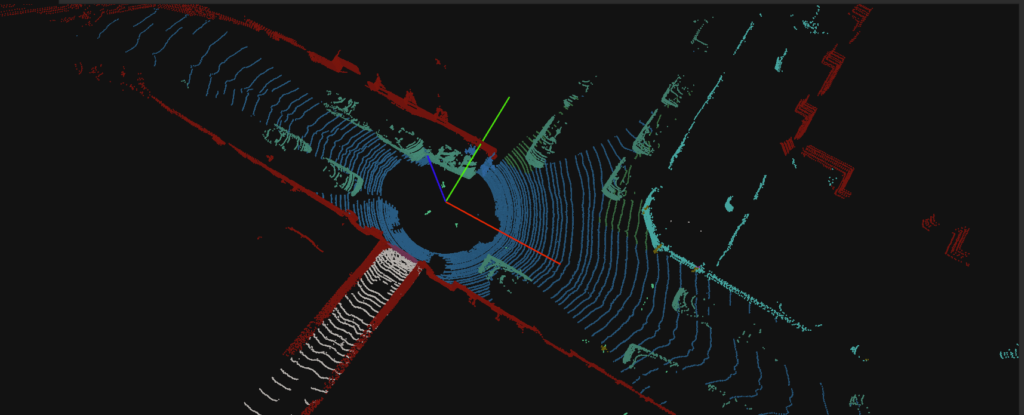

Our tools can ingest and visualize Velodyne lidar point clouds together with data from other sensors, such as cameras and radars, aligned accurately in both space and time. Users can then assign semantic labels to the fused data at scale, and the output can be used for model training or for validating system output.

We see ourselves as a perception development partner, and at times we have helped our customers with other parts of the perception stack as well. That, of course, does not include building lidar sensors 😊.
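Fusing lidar with camera data generally involves two steps: matching each lidar sweep to the camera frame captured closest in time, and projecting the 3D points into that frame using the lidar-to-camera extrinsic calibration and the camera intrinsics. The minimal Python sketch below illustrates both steps under simplifying assumptions; it is not Deepen's implementation. The function names `nearest_frame` and `project_points`, the distortion-free pinhole camera model, the axis conventions and the calibration values are all hypothetical.

```python
import bisect
from typing import List, Sequence, Tuple

# Hypothetical types for illustration only.
Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def nearest_frame(camera_stamps: Sequence[float], lidar_stamp: float) -> int:
    """Index of the camera frame whose timestamp is closest to lidar_stamp.

    Assumes camera_stamps is non-empty and sorted in ascending order.
    """
    i = bisect.bisect_left(camera_stamps, lidar_stamp)
    if i == 0:
        return 0
    if i == len(camera_stamps):
        return len(camera_stamps) - 1
    before, after = camera_stamps[i - 1], camera_stamps[i]
    # Pick whichever neighbor is closer in time (exact ties go to the earlier frame).
    return i if after - lidar_stamp < lidar_stamp - before else i - 1


def project_points(
    points: Sequence[Vec3],
    rotation: Mat3,        # assumed lidar-to-camera rotation (extrinsic)
    translation: Vec3,     # assumed lidar-to-camera translation in meters
    fx: float, fy: float,  # assumed focal lengths in pixels
    cx: float, cy: float,  # assumed principal point in pixels
    width: int, height: int,
) -> List[Tuple[float, float, float]]:
    """Project lidar points into a pinhole camera, returning (u, v, depth).

    Points behind the camera or outside the image bounds are dropped.
    Lens distortion is ignored for simplicity.
    """
    pixels = []
    for p in points:
        # Extrinsic transform: p_cam = R @ p_lidar + t
        xc, yc, zc = (
            sum(rotation[r][k] * p[k] for k in range(3)) + translation[r]
            for r in range(3)
        )
        if zc <= 0:  # behind the camera, so it cannot appear in the image
            continue
        u = fx * xc / zc + cx
        v = fy * yc / zc + cy
        if 0 <= u < width and 0 <= v < height:
            pixels.append((u, v, zc))
    return pixels


# Assumed axis conventions: lidar x forward, y left, z up;
# camera x right, y down, z forward. The camera sits at the lidar origin.
R = [[0.0, -1.0, 0.0],
     [0.0, 0.0, -1.0],
     [1.0, 0.0, 0.0]]
t = (0.0, 0.0, 0.0)
sweep = [(10.0, 0.0, 0.0), (-5.0, 0.0, 0.0), (10.0, -2.0, 1.0)]

print(nearest_frame([0.0, 0.1, 0.2], 0.14))  # 1
print(project_points(sweep, R, t, 500.0, 500.0, 320.0, 240.0, 640, 480))
# [(320.0, 240.0, 10.0), (420.0, 190.0, 10.0)]
```

The point behind the sensor is discarded, while the other two land at pixel coordinates with their depth attached, which is what lets a labeling tool overlay lidar returns on a camera image. Real pipelines typically also correct for lens distortion and for vehicle motion during a lidar sweep, which this sketch leaves out.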

AL: We continue to see lidars playing a key role in the sensor stacks of most AV makers. We believe adoption will keep growing, especially as lidars become more economical and companies such as Velodyne bring more models to market. We have been handling lidar data for years and have developed many techniques to deal with its particular properties. We believe the industry has collectively built enough technology to push this sensor modality further into AVs and other applications: smart cities, agriculture, delivery, robotics and more. Many of our customers already use Velodyne lidars, a trend we expect to continue for the foreseeable future.

Velodyne Lidar (Nasdaq: VLDR, VLDRW) ushered in a new era of autonomous technology with the invention of real-time surround view lidar sensors. Velodyne, a global leader in lidar, is known for its broad portfolio of breakthrough lidar technologies. Velodyne’s revolutionary sensor and software solutions provide flexibility, quality and performance to meet the needs of a wide range of industries, including robotics, industrial, intelligent infrastructure, autonomous vehicles and advanced driver assistance systems (ADAS). Through continuous innovation, Velodyne strives to transform lives and communities by advancing safer mobility for all.