Autonomous vehicle companies use simulation and machine learning to train, test and validate their self-driving systems for roadways. Even with massive data capture efforts, real-world data is not enough to cover all possible driving scenarios. Synthetic data fills the gap by modeling roadway environments, complete with people, traffic lights, empty parking spaces, and more.

ANYVERSE is a synthetic data provider that supports a wide range of applications, including autonomous vehicle (AV) and advanced driver assistance system (ADAS) development. The parent company behind ANYVERSE is Next Limit, a Spanish software company that is an Oscar-winning pioneer in 3D simulation and rendering.

ANYVERSE can model any driving situation using geographically stylized urban, suburban, rural and highway environments. Its solutions cover the full range of real-life conditions, including lighting, weather, varying physical conditions, color ranges, and vehicle and pedestrian behaviors.

We interviewed Ángel Tena, CTO of ANYVERSE, to learn how ANYVERSE helps autonomous system developers mirror the wide-ranging scenarios of reality in simulation and machine learning.

Tena: It is well known in the machine learning world that to train a model properly, the quality and diversity of the data matter as much as its quantity. The automotive industry offers a clear example: thousands of driven miles in which nothing special happens are worthless from the point of view of a machine learning model, which will quickly start overfitting to those uneventful conditions. At ANYVERSE, diversity and quality are the two most important features, and they allow us to tackle this problem. ANYVERSE includes a high-fidelity spectral rendering system that produces images very close to real-world images, as well as a procedural scene system that automatically creates a large number of different scenes.

Tena: For the image sensor, we simulate all the components involved in the acquisition process. In our rendering system, light is modeled by wavelength, just as in the real world. This lets us simulate the different modules inside the sensor very precisely, for example quantum efficiency (QE) curves, uniform and non-uniform noise, and more.
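To make this sensor pipeline more concrete, here is a minimal Python sketch of how a spectral signal could be turned into raw pixel values. It is not ANYVERSE's implementation: the function name `simulate_sensor`, the Gaussian-shaped QE curve, and every noise parameter (PRNU strength, read noise, full-well capacity, ADC gain and bit depth) are illustrative assumptions. Per-band photon flux is weighted by the QE curve, a fixed per-pixel gain pattern stands in for non-uniform noise, and Poisson shot noise plus Gaussian read noise are added before quantization.

```python
import numpy as np


def simulate_sensor(photon_flux, qe, exposure_s, prnu_sigma=0.01,
                    read_noise_e=2.0, full_well_e=10_000,
                    e_per_dn=2.5, bit_depth=12, seed=0):
    """Convert per-band photon flux into raw digital numbers (hypothetical model).

    photon_flux: array (H, W, B), photons/s reaching each pixel in B wavelength bands
    qe:          array (B,), quantum efficiency in [0, 1] for each band
    """
    rng = np.random.default_rng(seed)
    h, w, _ = photon_flux.shape

    # Expected photoelectrons: flux weighted by QE, summed over wavelength bands.
    mean_e = (photon_flux * qe).sum(axis=-1) * exposure_s

    # Non-uniform noise: a fixed per-pixel gain pattern (PRNU).
    gain_map = 1.0 + prnu_sigma * rng.standard_normal((h, w))
    mean_e = np.clip(mean_e * gain_map, 0.0, None)

    # Signal-dependent shot noise, then signal-independent read noise.
    electrons = rng.poisson(mean_e).astype(np.float64)
    electrons += rng.normal(0.0, read_noise_e, size=(h, w))

    # Saturate at the full-well capacity, then quantize in the ADC.
    electrons = np.clip(electrons, 0.0, full_well_e)
    max_dn = 2 ** bit_depth - 1
    return np.clip(np.round(electrons / e_per_dn), 0, max_dn).astype(np.uint16)


# Usage with 10 nm bands from 400 to 700 nm and an assumed bell-shaped QE curve.
bands = np.linspace(400.0, 700.0, 31)
qe = 0.6 * np.exp(-((bands - 550.0) / 120.0) ** 2)
flux = np.full((4, 4, bands.size), 1_000.0)   # flat, uniform illumination
raw = simulate_sensor(flux, qe, exposure_s=0.01)
```

Here a single seed drives both the fixed gain pattern and the temporal noise, so repeated calls return identical frames; a more faithful sketch would keep the PRNU map fixed per sensor and draw fresh shot and read noise for every frame.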

For lidar, we take a geometric approach and leverage our physically based material definitions to mimic the returns of a real lidar. For instance, the roughness of a material determines how strong the lidar return is. Our model still has some limitations that we are now removing, such as the inability to simulate beam divergence. We are very close to being able to simulate the characteristics of any lidar within our system.
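The link between roughness and return strength can be illustrated with a toy model. The sketch below is an assumption made for illustration, not ANYVERSE's lidar model: the hypothetical `lidar_return_intensity` blends a Lambertian (diffuse) term with a narrow specular lobe, weighted by a roughness value in [0, 1], and applies an inverse-square range falloff. Beam divergence, which Tena mentions as a current limitation, is not modeled.

```python
import math


def lidar_return_intensity(distance_m, incidence_rad, reflectance, roughness,
                           shininess=50.0):
    """Relative return strength under a toy diffuse/specular model (hypothetical).

    roughness: 1.0 = fully diffuse (Lambertian), 0.0 = mirror-like surface.
    incidence_rad: angle between the incoming beam and the surface normal.
    """
    if distance_m <= 0 or not 0.0 <= incidence_rad < math.pi / 2:
        return 0.0                        # invalid geometry or beam grazing/behind the surface
    cos_t = math.cos(incidence_rad)
    diffuse = cos_t                       # Lambertian back-scatter toward the sensor
    specular = cos_t ** shininess         # narrow lobe: strong only near normal incidence
    blend = roughness * diffuse + (1.0 - roughness) * specular
    return reflectance * blend / distance_m ** 2   # inverse-square range falloff


# At 30 degrees incidence, a rough surface returns far more energy than a smooth one.
rough = lidar_return_intensity(10.0, math.radians(30), 0.5, roughness=1.0)
smooth = lidar_return_intensity(10.0, math.radians(30), 0.5, roughness=0.0)
```

At normal incidence both terms equal 1, so roughness only changes the result for oblique beams; that is the behavior the toy model is meant to show.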

Tena: Many parameters can be adjusted to customize a dataset. Usually we start with the type of scene: urban, suburban, highway, parking lot or other scenarios. Then we continue with the position and type of the dynamic objects that will populate the base scene: vehicles, pedestrians, props, etc. Environmental conditions, such as weather, time of day and sky cover, can also be defined.
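One way to picture these customization axes, and the diversity Tena emphasizes earlier, is as a configuration that is randomly sampled for each scene. The dataclasses, field names, value lists, and ranges below are hypothetical and do not reflect ANYVERSE's actual API or configuration format.

```python
import random
from dataclasses import dataclass, field
from typing import List

# Hypothetical value sets for each customization axis.
SCENE_TYPES = ["urban", "suburban", "highway", "parking_lot"]
OBJECT_KINDS = ["vehicle", "pedestrian", "prop"]
WEATHER = ["clear", "rain", "fog", "snow"]
SKY_COVER = ["clear", "partly_cloudy", "overcast"]


@dataclass
class DynamicObject:
    kind: str
    x_m: float          # position relative to the scene origin
    y_m: float
    heading_deg: float


@dataclass
class SceneConfig:
    scene_type: str
    time_of_day_h: float
    weather: str
    sky_cover: str
    objects: List[DynamicObject] = field(default_factory=list)


def sample_scene(rng: random.Random, max_objects: int = 20) -> SceneConfig:
    """Draw one scene: base scene type, dynamic objects, then environment."""
    objects = [
        DynamicObject(
            kind=rng.choice(OBJECT_KINDS),
            x_m=rng.uniform(-50.0, 50.0),
            y_m=rng.uniform(-50.0, 50.0),
            heading_deg=rng.uniform(0.0, 360.0),
        )
        for _ in range(rng.randint(1, max_objects))   # 1..max_objects inclusive
    ]
    return SceneConfig(
        scene_type=rng.choice(SCENE_TYPES),
        time_of_day_h=rng.uniform(0.0, 24.0),
        weather=rng.choice(WEATHER),
        sky_cover=rng.choice(SKY_COVER),
        objects=objects,
    )


def sample_dataset(n: int, seed: int = 0) -> List[SceneConfig]:
    """Generate n scene configurations reproducibly from a seed."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


scenes = sample_dataset(50, seed=1)
```

Fixing the seed makes a dataset reproducible, while drawing many scenes spreads the samples across scene types, weather, and lighting instead of repeating the same uneventful conditions.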

Additionally, we have developed a procedural scene-generation engine that can automatically recreate any plausible driving scenario from standard sources such as OpenStreetMap and OpenDRIVE. This module can dramatically expand the number of different scenarios and dynamic conditions.

Tena: Using our ray tracing technology, we cast rays from the lidar sensor and compute their geometric intersections with the scene. We evaluate the material properties and the surface normal at each intersection point to filter valid points (valid returns). For every intersection, we provide the 3D position of the point (in either the device or the world coordinate system), the distance from the lidar sensor, the class of the object, and its material. We can implement any sweeping pattern just by providing a few parameters, such as points per second, vertical/horizontal field of view, vertical/horizontal angular resolution, and rotation rate. We can also combine lidar and camera optics to simulate advanced sensors with both capabilities.
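The ray-casting and sweep-pattern description can be sketched as follows. This is a simplified illustration under stated assumptions, not ANYVERSE's implementation: it models a 360° spinning lidar with evenly spaced vertical channels, derives the horizontal angular resolution from points per second, channel count, and rotation rate, and intersects the rays with a single flat ground plane. The grazing-angle cutoff stands in for the material and surface-normal evaluation, and the function names and the `road`/`asphalt` labels are hypothetical.

```python
import numpy as np


def spinning_scan_directions(points_per_second, rotation_hz, v_fov_deg, channels):
    """Unit ray directions for one revolution of a spinning lidar (hypothetical).

    Each channel fires points_per_second / (channels * rotation_hz) times per
    revolution, which sets the horizontal angular resolution.
    """
    firings = int(points_per_second / (channels * rotation_hz))
    azimuth = np.deg2rad(np.arange(firings) * 360.0 / firings)
    elevation = np.deg2rad(np.linspace(v_fov_deg[0], v_fov_deg[1], channels))
    az, el = np.meshgrid(azimuth, elevation, indexing="ij")
    dirs = np.stack([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)], axis=-1)
    return dirs.reshape(-1, 3)


def intersect_ground(origin, dirs, ground_z=0.0, max_range_m=100.0,
                     max_incidence_deg=80.0):
    """Cast rays at a flat ground plane and keep only valid returns."""
    normal = np.array([0.0, 0.0, 1.0])
    dz = dirs[:, 2]
    downward = dz < 0                      # only downward rays can reach the ground
    t = np.full(len(dirs), np.inf)         # ray parameter equals distance for unit dirs
    t[downward] = (ground_z - origin[2]) / dz[downward]
    # Reject grazing hits: angle between the ray and the surface normal too large.
    cos_incidence = np.abs(dirs @ normal)
    valid = (downward & (t > 0) & (t <= max_range_m)
             & (cos_incidence >= np.cos(np.deg2rad(max_incidence_deg))))
    distance = t[valid]
    xyz = origin + distance[:, None] * dirs[valid]   # hit positions in the world frame
    n = len(distance)
    return {"xyz": xyz, "distance": distance,
            "class": np.full(n, "road"), "material": np.full(n, "asphalt")}


# Example parameters: sensor mounted 1.8 m above the ground.
dirs = spinning_scan_directions(300_000, 10.0, (-15.0, 15.0), 16)
returns = intersect_ground(np.array([0.0, 0.0, 1.8]), dirs)
```

With 300,000 points per second, 16 channels, and a 10 Hz rotation rate, each channel fires 1,875 times per revolution, giving a horizontal resolution of 360° / 1,875 = 0.192°.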

Tena: Yes, ANYVERSE can definitely be used for many applications beyond AV and ADAS. In general, we can help wherever a perception system is used for data acquisition. UAVs, for example, are now widely used for visual inspection and defect detection in many areas, such as detecting distress in airport runways, finding defects in power line insulators, or inspecting any other infrastructure that requires many inspection cycles.

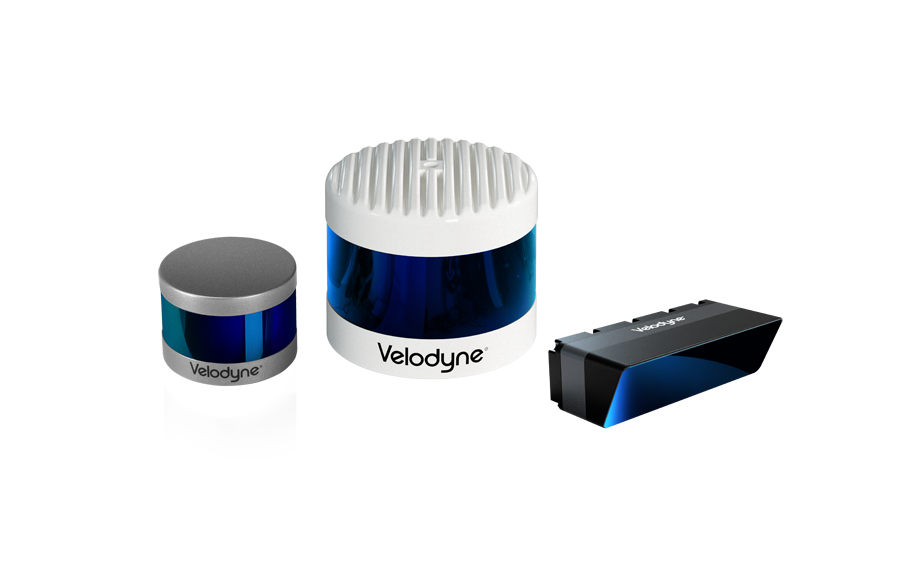

Velodyne Lidar (Nasdaq: VLDR, VLDRW) ushered in a new era of autonomous technology with the invention of real-time surround view lidar sensors. Velodyne, a global leader in lidar, is known for its broad portfolio of breakthrough lidar technologies. Velodyne’s revolutionary sensor and software solutions provide flexibility, quality and performance to meet the needs of a wide range of industries, including robotics, industrial, intelligent infrastructure, autonomous vehicles and advanced driver assistance systems (ADAS). Through continuous innovation, Velodyne strives to transform lives and communities by advancing safer mobility for all.